How AI voicebots threaten the psyche of US service members and spies

Artificial intelligence voice agents with various capabilities can now guide interrogations worldwide, Pentagon officials told Defense News. This advance has influenced the design and testing of U.S. military AI agents intended for questioning personnel seeking access to classified material.

The development comes as concerns grow that lax regulations allow AI programmers to dodge responsibility when an algorithmic actor perpetrates emotional abuse or “no-marks” cybertorture. Notably, a teenager allegedly died by suicide, and several others endured mental distress, after conversing with self-learning voicebot and chatbot “companions” that dispensed antagonizing language.

Now, seven years after writing about physical torture in “The Rise of A.I. Interrogation in the Dawn of Autonomous Robots and the Need for an Additional Protocol to the U.N. Convention Against Torture,” privacy attorney Amanda McAllister Novak sees an even greater need for bans and criminal repercussions.

In the wake of the use of “chatbots, voicebots, and other AI-enabled technologies for psychologically-abusive purposes — we have to think about if it’s worth having AI-questioning systems in the military context,” said Novak, who also serves on the World Without Genocide board of directors. Novak spoke to Defense News on her own behalf.

Royal Reff, a spokesperson for the U.S. Defense Counterintelligence and Security Agency (DCSA), which screens individuals holding or applying for security clearances, said in a statement that the agency’s AI voice agent was “expressly developed” to “mitigat[e] gender and cultural biases” that human interviewers may harbor.

Also, “because systems like the interviewer software” are “currently being used worldwide, including within the U.S. by state and local law enforcement, we believe that it is important for us to understand the capabilities of such systems,” he stated.

DCSA spokesperson Cindy McGovern added that the development of this technology continues because of its “potential for enhancing U.S. national security, regardless of other foreign applications.”

After hearing the rationale for the project, Novak registered concerns about the global race for dominance in military AI capabilities.

Odds are high that, “no matter how much the government cares about proper training, oversight and guardrails,” cybercriminal organizations or foreign-sponsored cybercriminals could hack into and weaponize military-owned AI to psychologically hurt officers or civilians, she said.

“Online criminal spaces are thriving” and “harming the most vulnerable people,” Novak added.

Investors are betting $500 billion that data centers for running AI applications will ultimately secure world leadership in AI and cost savings across the public and private sectors. The $13 billion conversational AI market alone is projected to nearly quadruple to $50 billion by 2030, while the voice generator industry is expected to soar from $3 billion to $40 billion by 2032. Meanwhile, the U.S. Central Intelligence Agency has been toying with AI interrogators since at least the early 1980s.

DCSA officials publicly wrote in 2022 that whether a security interview can be fully automated remains an open question. Preliminary results from mock questioning sessions “are encouraging,” officials noted, underscoring benefits such as “longer, more naturalistic types of interview formats.”

Reff stated that the agency “would not employ any such system that was not first sufficiently studied to understand its potential,” including “negative consequences.”

Researchers let volunteers raise concerns after each test, and none have indicated that the voicebot interviewer harmed them psychologically or otherwise, he added.

However, experiments with a recent prototype barred volunteers with mental disorders from participating, whereas actual security interviews typically do not exempt individuals with mental health conditions from questioning, noted Renée Cummings, a data science professor and criminal psychologist at the University of Virginia.

Cummings said that research and testing can never account for every psychological variable that may influence the emotional or cognitive state of the interviewee.

In laboratory or field-testing scenarios, “you can’t expose someone to extreme torture by an avatar or by a bot to see the result,” said Cummings, who also sits on the advisory council of the AI & Equality Initiative at the Carnegie Council for Ethics in International Affairs. “We need to focus on gaining a more sophisticated understanding about the impacts of algorithms on the psychological state, our emotions and our brain — because of AI’s ability to impact in real-time.”

Screening the voicebot screeners

An early 1980s CIA transcript of a purported conversation between an AI program and an alleged spy highlights the national security world’s long-held interest in automating human-source intelligence, or HUMINT, collection.

Developers designed the chatbot’s algorithms to analyze correlations between suspected spook “Joe Hardesty’s” behaviors and words or phrases in Hardesty’s responses to open-ended questions.

From this analysis, the chatbot pegged some of the suspect’s vulnerabilities, including topics that may hit a raw nerve:

AI: What do you tend to think is my interest in your government?

Hardesty: I think you would do in my government if you had a chance.

AI: You are prone to think I would do in your government because?

Hardesty: You are anti-democratic.

In 1983, the CIA quipped that Joe Hardesty is fortunate that “should the probing get too discomforting, he will have an option that will not be available to him in a true overseas interview situation — he can stop the questions with a flick of the ‘off’ switch.”

Today, Bradley Moss, a lawyer who represents whistleblowers accused of leaking, is not laughing at DCSA’s vision of fully-automated interviews.

“There would have to be someone observing” the avatar “that can stop the process, that can come into the room and insert a human element to it,” he cautioned.

Then again, other critics note that a human may not want to intervene. A situation with “a human overseer monitoring the chatbot is fine, unless the human also believes that verbal torture is a good thing. And that is a real possibility,” said Herb Lin, a senior research scholar for cyber policy and security at Stanford University who once held several security clearances.

Despite a global ban on torture and evidence that rapport-building interviews are more efficient than ones gunning for confessions, intelligence officials continue subjecting interviewees to inhumane treatment.

Novak, the data privacy attorney and anti-genocide activist, said the idea that excessive force gets the job done may encourage some government contractors to set performance metrics for voice algorithms that permit bullying.

“While AI has a tremendous ability to bring out the best in human potential, it also has the ability to exacerbate the worst of human potential and to elevate that hostility,” she added.

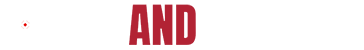

Parents contend that voicebot and chatbot companions, trained on content from online forums, show just that.

Character AI, the firm that offers virtual relationships with bots patterned after icons and influencers, profits from keeping users glued to their phones. On average, each user talks with various virtual friends for two hours a day. Character AI officials have stated that, after learning of the concerns, the company reduced the likelihood of users encountering suggestive content and now warns users after hour-long sessions.

Novak commented that the accusations against Character AI illustrate the ability of self-learning voicebots to amplify indecency in training data and to distance trainers from accountability for that offensiveness.

In the national security realm, developers of military AI voice systems that could abuse or terrorize operate in a virtually lawless no-man’s land. AI ethics policies in the world’s most powerful country offer almost no guidance on de-escalating abusive conversations.

Early U.S. policies on responsible and ethical AI in the national security space focused on accuracy, privacy and minimizing racial and ethnic biases, without regard for psychological impact. That policy gap widened after President Donald Trump revoked many regulations in an executive order aimed at accelerating private sector innovation.

Psychological suffering is often “brushed away” as a mere allegation because “it leaves no obvious physical scars.”

The United Nations initially did not address “psychological torture” under the 1984 Convention Against Torture. Not until five years ago did the term take on significance.

A 2020 UN report now defines “torture,” in part, as any technique intended or designed to purposefully inflict severe mental pain or suffering “without using the conduit” of “severe physical pain or suffering.”

At bottom, psychological torture targets basic human needs. Its techniques arouse fear; breach privacy or sexual integrity to kindle shame or suicidal ideation; manipulate sound to heighten disorientation and exhaustion; foster and then betray sympathy to isolate; and instill helplessness.

When an algorithmic audiobot is the conduit of cruelty, neither the UN treaty nor other international laws are equipped to hold humans accountable, Novak said. Without binding rules on the use of AI in the military context, “bad actors will deny any such torture was ‘intentional’” — a required element for criminal responsibility that the law created before AI matured, she explained.

Looking to the future, Nils Melzer, author of the 2020 UN report and a former UN special rapporteur on torture, warned that the interpretation of psychological torture must evolve in sync with emerging technologies, “such as artificial intelligence,” because cyber environments provide “virtually guaranteed anonymity and almost complete impunity” to offenders.